Stability of the stationary solutions of neural field equations with propagation delays

The Journal of Mathematical Neuroscience volume 1, Article number: 1 (2011)

Abstract

In this paper, we consider neural field equations with space-dependent delays. Neural fields are continuous assemblies of mesoscopic models arising when modeling macroscopic parts of the brain. They are modeled by nonlinear integro-differential equations. We rigorously prove, for the first time to our knowledge, sufficient conditions for the stability of their stationary solutions. We use two methods: (1) the computation of the eigenvalues of the linear operator defined by the linearized equations, and (2) the formulation of the problem as a fixed point problem. The first method involves tools of functional analysis and yields a new estimate of the semigroup of the previous linear operator using the eigenvalues of its infinitesimal generator. It yields a sufficient condition for stability which is independent of the characteristics of the delays. The second method allows us to find new sufficient conditions for the stability of stationary solutions which depend upon the values of the delays. These conditions are very easy to evaluate numerically. We illustrate the conservativeness of the bounds by comparing them with numerical simulations.

1 Introduction

Neural field equations first appeared as a spatially continuous extension of Hopfield networks with the seminal works of Wilson and Cowan, and Amari [1, 2]. These networks describe the mean activity of neural populations by nonlinear integral equations and play an important role in the modeling of various cortical areas including the visual cortex. They have been modified to take into account several relevant biological mechanisms like spike-frequency adaptation [3, 4], the tuning properties of some populations [5] or the spatial organization of the populations of neurons [6]. In this work we focus on the role of the delays coming from the finite velocity of signals in axons and dendrites, or from the time of synaptic transmission [7, 8]. It turns out that delayed neural field equations feature some interesting mathematical difficulties. The main question we address in the sequel is the following: once the stationary states of a non-delayed neural field equation are well understood, what changes, if any, are caused by the introduction of propagation delays? We think this question is important since non-delayed neural field equations are fairly well understood by now, at least in terms of their stationary solutions, but the same is not true for their delayed versions, which in many cases are better models, closer to experimental findings. A lot of work has been done concerning the role of delays in wave propagation or in the linear stability of stationary states but, except in [9], the method used reduces to the computation of the eigenvalues (which we call characteristic values) of the linearized equation in some analytically convenient cases (see [10]). Some results are known in the case of a finite number of neurons [11, 12] and in the case of a small number of distinct delays [13, 14]: the dynamical portrait is highly intricate even in the case of two neurons with delayed connections.

The purpose of this article is to propose a solid mathematical framework to characterize the dynamical properties of neural field systems with propagation delays and to show that it allows us to find sufficient delay-dependent bounds for the linear stability of the stationary states. This is a step in the direction of answering the question of how much delay can be introduced in a neural field model without destabilization. As a consequence one can infer in some cases, without much extra work, from the analysis of a neural field model without propagation delays, the changes caused by the finite propagation times of signals. This framework also allows us to prove a linear stability principle to study the bifurcations of the solutions when varying the nonlinear gain and the propagation times.

The paper is organized as follows: in Section 2 we describe our model of delayed neural field, state our assumptions and prove that the resulting equations are well-posed and enjoy a unique bounded solution for all times. In Section 3 we give two different methods for expressing the linear stability of stationary cortical states, that is, of the time independent solutions of these equations. The first one, Section 3.1, is computationally intensive but accurate. The second one, Section 3.2, is much lighter in terms of computation but unfortunately leads to somewhat coarse approximations. Readers not interested in the theoretical and analytical developments can go directly to the summary of this section. We illustrate these abstract results in Section 4 by applying them to a detailed study of a simple but illuminating example.

2 The model

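We consider the following neural field equations defined over an open bounded piece of cortex and/or feature space $\Omega \subset \mathbb{R}^d$. They describe the dynamics of the mean membrane potential of each of p neural populations.

$$
\begin{cases}
\left(\dfrac{d}{dt} + l_i\right) V_i(t, r) = \displaystyle\sum_{j=1}^{p} \int_\Omega J_{ij}(r, \bar r)\, S\big[\sigma_j\big(V_j(t - \tau_{ij}(r, \bar r), \bar r) - h_j\big)\big]\, d\bar r + I_i^{ext}(r, t), & t \ge 0,\ 1 \le i \le p, \\
V_i(t, r) = \phi_i(t, r), & t \in [-T, 0].
\end{cases}
\tag{1}
$$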

We give an interpretation of the various parameters and functions that appear in (1).

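Ω is a finite piece of cortex and/or feature space and is represented as an open bounded set of $\mathbb{R}^d$. The vectors $r$ and $\bar r$ represent points in Ω.

The function $S : \mathbb{R} \to (0, 1)$ is the normalized sigmoid function:

$$
S(z) = \frac{1}{1 + e^{-z}}. \tag{2}
$$

It describes the relation between the firing rate $\nu_i$ of population i and its membrane potential $V_i$, for example, $\nu_i = S[\sigma_i (V_i - h_i)]$. We note V the p-dimensional vector $(V_1, \ldots, V_p)$.

The p functions $\phi_i$, $i = 1, \ldots, p$, represent the initial conditions, see below. We note ϕ the p-dimensional vector $(\phi_1, \ldots, \phi_p)$.

The p functions $I_i^{ext}$, $i = 1, \ldots, p$, represent external currents from other cortical areas. We note $I^{ext}$ the p-dimensional vector $(I_1^{ext}, \ldots, I_p^{ext})$.

The $p \times p$ matrix of functions $J = \{J_{ij}\}_{i,j=1,\ldots,p}$ represents the connectivity between populations i and j, see below.

The p real values $h_i$, $i = 1, \ldots, p$, determine the threshold of activity for each population, that is, the value of the membrane potential corresponding to 50% of the maximal activity.

The p real positive values $\sigma_i$, $i = 1, \ldots, p$, determine the slopes of the sigmoids at the origin.

Finally the p real positive values $l_i$, $i = 1, \ldots, p$, determine the speed at which each membrane potential decreases exponentially toward its rest value.

We also introduce the function $\mathbf{S} : \mathbb{R}^p \to \mathbb{R}^p$, defined by $\mathbf{S}(x) = [S(\sigma_1(x_1 - h_1)), \ldots, S(\sigma_p(x_p - h_p))]$, and the diagonal $p \times p$ matrix $L_0 = \operatorname{diag}(l_1, \ldots, l_p)$.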
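As an illustration of the nonlinearity just described, the following minimal Python sketch evaluates a normalized sigmoid and the population-wise map built from it. It assumes the standard logistic form $S(z) = 1/(1 + e^{-z})$; the function names `sigmoid`, `population_rates` and `rate_slopes` are hypothetical and only serve the example.

```python
import math


def sigmoid(z: float) -> float:
    """Normalized sigmoid S(z) = 1 / (1 + exp(-z)), with values between 0 and 1.

    Assumption: the logistic form is used for the 'normalized sigmoid'.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # rewritten form avoids overflow for very negative z
    return ez / (1.0 + ez)


def population_rates(v, sigmas, thresholds):
    """Vector map: component i is S(sigma_i * (V_i - h_i))."""
    return [sigmoid(s * (vi - h)) for vi, s, h in zip(v, sigmas, thresholds)]


def rate_slopes(v, sigmas, thresholds):
    """Derivative of each component with respect to V_i: sigma_i * S * (1 - S)."""
    rates = population_rates(v, sigmas, thresholds)
    return [s * r * (1.0 - r) for s, r in zip(sigmas, rates)]
```

With this form, a population sitting exactly at its threshold fires at half of its maximal rate, and the slope there is proportional to the gain of that population.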

A difference with other studies is the intrinsic dynamics of the population given by the linear response of chemical synapses. In [9, 15], $\left(\frac{d}{dt} + l_i\right)$ is replaced by $\left(\frac{d}{dt} + l_i\right)^2$ to use the alpha function synaptic response. We use $\left(\frac{d}{dt} + l_i\right)$ for simplicity although our analysis applies to more general intrinsic dynamics, see Proposition 3.10 in Section 3.1.3.

For the sake of generality, the propagation delays are not assumed to be identical for all populations, hence they are described by a matrix $\tau(r, \bar r)$ whose element $\tau_{ij}(r, \bar r)$ is the propagation delay between population j at $\bar r$ and population i at r. The reason for this assumption is that it is still unclear from physiology if propagation delays are independent of the populations. We assume for technical reasons that τ is continuous, that is, $\tau \in C^0(\bar\Omega^2, \mathbb{R}_+^{p \times p})$. Moreover biological data indicate that τ is not a symmetric function (that is, $\tau_{ij}(r, \bar r) \neq \tau_{ij}(\bar r, r)$), thus no assumption is made about this symmetry unless otherwise stated.

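In order to compute the righthand side of (1), we need to know the voltage V on some interval $[-T, 0]$. The value of T is obtained by considering the maximal delay:

$$
\tau_m = \max_{i, j,\ (r, \bar r) \in \bar\Omega \times \bar\Omega} \tau_{ij}(r, \bar r).
$$

Hence we choose $T = \tau_m$.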

2.1 The propagation-delay function

What are the possible choices for the propagation-delay function $\tau(r, \bar r)$? There are few papers dealing with this subject. Our analysis is built upon [16]. The authors of this paper study, inter alia, the relationship between the path length along axons from soma to synaptic boutons versus the Euclidean distance to the soma. They observe a linear relationship with a slope close to one. If we neglect the dendritic arbor, this means that if a neuron located at r is connected to another neuron located at $\bar r$, the path length of this connection is very close to $\|r - \bar r\|$, in other words, axons are straight lines. According to this, we will choose in the following:

$$
\tau(r, \bar r) = c\, \|r - \bar r\|_2,
$$

where c is the inverse of the propagation speed.
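A delay proportional to the Euclidean distance is straightforward to tabulate on a set of sample points. The sketch below is only an illustration: the function names, the 5 × 5 grid on the unit square and the value c = 2 are hypothetical choices, not taken from the paper.

```python
import math


def propagation_delay(r, r_bar, c):
    """Delay c * ||r - r_bar||_2, with c the inverse of the propagation speed."""
    return c * math.dist(r, r_bar)


def maximal_delay(points, c):
    """Largest delay over all pairs of sample points (a discrete proxy for the maximal delay)."""
    return max(propagation_delay(r, rb, c) for r in points for rb in points)


# Hypothetical example: a 5 x 5 grid on the closed unit square and c = 2.
grid = [(i / 4, j / 4) for i in range(5) for j in range(5)]
tau_m = maximal_delay(grid, c=2.0)
```

On a convex domain sampled up to its boundary, the maximal delay is reached between the two most distant points, here two opposite corners of the square.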

2.2 Mathematical framework

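A convenient functional setting for the non-delayed neural field equations (see [17–19]) is to use the space $\mathcal{F} = L^2(\Omega, \mathbb{R}^p)$, which is a Hilbert space endowed with the usual inner product:

$$
\langle V, U \rangle_{\mathcal{F}} \equiv \sum_{i=1}^{p} \int_\Omega V_i(r)\, U_i(r)\, dr.
$$

To give a meaning to (1), we define the history space $\mathcal{C} = C^0([-\tau_m, 0], \mathcal{F})$ with $\|\phi\|_{\mathcal{C}} = \sup_{t \in [-\tau_m, 0]} \|\phi(t)\|_{\mathcal{F}}$, which is the Banach phase space associated with equation (3) below. Using the notation $V_t(\theta) = V(t + \theta)$, $\theta \in [-\tau_m, 0]$, we write (1) as:

$$
\begin{cases}
\dot V(t) = -L_0 V(t) + L_1 \mathbf{S}(V_t) + I^{ext}(t), \\
V_0 = \phi \in \mathcal{C},
\end{cases}
\tag{3}
$$

where

$$
L_1 : \mathcal{C} \to \mathcal{F}, \quad \phi \mapsto \int_\Omega J(\cdot, \bar r)\, \phi(\bar r, -\tau(\cdot, \bar r))\, d\bar r
$$

is the linear continuous operator satisfying (the notation $|||\cdot|||$ is defined in Definition A.2 of Appendix A) $|||L_1||| \le \|J\|_{L^2(\Omega^2, \mathbb{R}^{p \times p})}$. Notice that most of the papers on this subject assume Ω infinite, hence requiring $\tau_m = \infty$. This raises difficult mathematical questions which we do not have to worry about, unlike [9, 15, 20–24].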

We first recall the following proposition whose proof appears in [25].

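Proposition 2.1 If the following assumptions are satisfied:

1. $J \in L^2(\Omega^2, \mathbb{R}^{p \times p})$,
2. the external current $I^{ext} \in C^0(\mathbb{R}, \mathcal{F})$,
3. $\tau \in C^0(\bar\Omega^2, \mathbb{R}_+^{p \times p})$, $\sup_{\bar\Omega^2} \tau \le \tau_m$.

Then for any $\phi \in \mathcal{C}$, there exists a unique solution $V \in C^1([0, \infty), \mathcal{F}) \cap C^0([-\tau_m, \infty), \mathcal{F})$ to (3).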

Notice that this result gives existence on $\mathbb{R}_+$: finite-time explosion is impossible for this delayed differential equation. Nevertheless, a particular solution could grow indefinitely; we now prove that this cannot happen.
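To make the role of the history space concrete, here is a minimal simulation sketch of a scalar (one-population) delayed field on the interval (0, 1), using an explicit Euler step and a buffer holding the last maximal-delay window of the solution. Everything in it is an assumption made for illustration: the exponential kernel, the distance-proportional delay, the constant initial history, the parameter values and the function name `simulate_delayed_field`. It is not the numerical scheme used by the authors.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def simulate_delayed_field(n=20, c=1.0, l=1.0, sigma=1.0, h=0.0, dt=0.01,
                           t_end=5.0, i_ext=0.0, phi=0.1,
                           kernel=lambda r, rb: math.exp(-abs(r - rb))):
    """Explicit Euler scheme for dV/dt = -l V + int J S(sigma (V(t - tau) - h)) + I_ext."""
    xs = [(k + 0.5) / n for k in range(n)]  # midpoints of a uniform grid on (0, 1)
    dr = 1.0 / n
    # Delay between each pair of grid points, expressed in time steps.
    lag = [[round(c * abs(r - rb) / dt) for rb in xs] for r in xs]
    max_lag = max(max(row) for row in lag)
    weights = [[kernel(r, rb) * dr for rb in xs] for r in xs]
    # history[-1] is the current state, history[-1 - m] the state m steps ago.
    # Assumed initial condition: the constant function phi on the whole delay window.
    history = [[phi] * n for _ in range(max_lag + 1)]
    for _ in range(round(t_end / dt)):
        current = history[-1]
        new = []
        for i in range(n):
            integral = sum(
                weights[i][j] * sigmoid(sigma * (history[-1 - lag[i][j]][j] - h))
                for j in range(n)
            )
            new.append(current[i] + dt * (-l * current[i] + integral + i_ext))
        history.append(new)
        history.pop(0)  # keep only the window needed to evaluate delayed terms
    return xs, history[-1]
```

Calling `simulate_delayed_field()` returns the grid and the final state. With these particular choices the delayed integral term stays between 0 and 1, so the discrete solution remains in that range, in line with the boundedness discussed in the next subsection.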

2.3 Boundedness of solutions

A valid model of neural networks should only feature bounded membrane potentials. We find a bounded attracting set in the spirit of our previous work with non-delayed neural mass equations. The proof is almost the same as in [19] but some care has to be taken because of the delays.

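Theorem 2.2 All the trajectories of the equation (3) are ultimately bounded by the same constant R (see the proof) if $I \equiv \max_{t \in \mathbb{R}^+} \|I^{ext}(t)\|_{\mathcal{F}} < \infty$.

Proof Let us define $f : \mathbb{R} \times \mathcal{C} \to \mathbb{R}$ as

$$
f(t, V_t) \equiv \big\langle -L_0 V_t(0) + L_1 \mathbf{S}(V_t) + I^{ext}(t),\ V(t) \big\rangle_{\mathcal{F}} = \frac{1}{2} \frac{d \|V\|_{\mathcal{F}}^2}{dt}.
$$

We note $l = \min_{i = 1, \ldots, p} l_i$ and from Lemma B.2 (see Appendix B.1):

$$
f(t, V_t) \le -l\, \|V(t)\|_{\mathcal{F}}^2 + \big(\sqrt{p |\Omega|}\, \|J\|_{L^2(\Omega^2, \mathbb{R}^{p \times p})} + I\big) \|V(t)\|_{\mathcal{F}}.
$$

Thus, if $\|V(t)\|_{\mathcal{F}} \ge 2\, \dfrac{\sqrt{p |\Omega|}\, \|J\|_{L^2(\Omega^2, \mathbb{R}^{p \times p})} + I}{l} \equiv R$, then $f(t, V_t) \le -\dfrac{l R^2}{2} \equiv -\delta < 0$.

Let us show that the open ball of $\mathcal{F}$ of center 0 and radius R, $B_R$, is stable under the dynamics of equation (3). We know that $V(t)$ is defined for all $t \ge 0$ and that $f < 0$ on $\partial B_R$, the boundary of $B_R$. We consider three cases for the initial condition $V_0$.

If $V_0 \in B_R$, set $T = \sup\{t \mid \forall s \in [0, t],\ V(s) \in \bar B_R\}$. Suppose that $T \in \mathbb{R}$, then $V(T)$ is defined and belongs to $\bar B_R$, the closure of $B_R$, because $\bar B_R$ is closed, in effect to $\partial B_R$. We also have $\frac{d}{dt} \|V\|_{\mathcal{F}}^2 \big|_{t = T} = 2 f(T, V_T) \le -2\delta < 0$ because $V(T) \in \partial B_R$. Thus we deduce that for $\varepsilon > 0$ and small enough, $V(T + \varepsilon) \in \bar B_R$, which contradicts the definition of T. Thus $T \notin \mathbb{R}$ and $\bar B_R$ is stable.

Because $f < 0$ on $\partial B_R$, $V_0 \in \partial B_R$ implies that $\forall t > 0$, $V(t) \in B_R$.

Finally we consider the case $V_0 \notin \bar B_R$. Suppose that $\forall t > 0$, $V(t) \notin \bar B_R$, then $\forall t > 0$, $\frac{d}{dt} \|V\|_{\mathcal{F}}^2 \le -2\delta$, thus $\|V(t)\|_{\mathcal{F}}$ is monotonically decreasing and reaches the value of R in finite time when $V(t)$ reaches $\partial B_R$. This contradicts our assumption. Thus $\exists T > 0$ such that $V(T) \in B_R$. □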

3 Stability results

When studying a dynamical system, a good starting point is to look for invariant sets. Theorem 2.2 provides such an invariant set but it is a very large one, not sufficient to convey a good understanding of the system. Other invariant sets (included in the previous one) are stationary points. Notice that delayed and non-delayed equations share exactly the same stationary solutions, also called persistent states. We can therefore make good use of the harvest of results that are available about these persistent states which we note $V^f$. Note that in most papers dealing with persistent states, the authors compute one of them and are satisfied with the study of the local dynamics around this particular stationary solution. Very few authors (we are aware only of [19, 26]) address the problem of the computation of the whole set of persistent states. Despite these efforts they have not yet been able to get a complete grasp of the global dynamics. To summarize, in order to understand the impact of the propagation delays on the solutions of the neural field equations, it is necessary to know all their stationary solutions and the dynamics in the region where these stationary solutions lie. Unfortunately such knowledge is currently not available. Hence we must be content with studying the local dynamics around each persistent state (computed, for example, with the tools of [19]) with and without propagation delays. This is already, we think, a significant step forward toward understanding delayed neural field equations.

From now on we note $V^f$ a persistent state of (3) and study its stability.

We can identify at least three ways to do this:

1. to derive a Lyapunov functional,
2. to use a fixed point approach,
3. to determine the spectrum of the infinitesimal generator associated to the linearized equation.

Previous results concerning stability bounds in delayed neural mass equations are ‘absolute’ results that do not involve the delays: they provide a sufficient condition, independent of the delays, for the stability of the fixed point (see [15, 20–22]). The bound they find is similar to our second bound in Proposition 3.13. They ‘proved’ it by showing that if the condition was satisfied, the eigenvalues of the infinitesimal generator of the semigroup of the linearized equation had negative real parts. This is not sufficient because a more complete analysis of the spectrum (for example, the essential part) is necessary, as shown below, in order to prove that the semigroup is exponentially bounded. In our case we prove this assertion in the case of a bounded cortex (see Section 3.1). To our knowledge it is still unknown whether this is true in the case of an infinite cortex.

These authors also provide a delay-dependent sufficient condition to guarantee that no oscillatory instabilities can appear, that is, they give a condition that forbids the existence of solutions of the form . However, this result does not give any information regarding stability of the stationary solution.

We use the second method cited above, the fixed point method, to prove a more general result which takes into account the delay terms. We also use both the second and the third method above, the spectral method, to prove the delay-independent bound from [15, 20–22]. We then evaluate the conservativeness of these two sufficient conditions. Note that the delay-independent bound has been correctly derived in [25] using the first method, the Lyapunov method. It might be of interest to explore its potential to derive a delay-dependent bound.

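We write the linearized version of (3) as follows. We choose a persistent state $V^f$ and perform the change of variable $U = V - V^f$. The linearized equation writes

$$
\begin{cases}
\dfrac{d}{dt} U(t) = -L_0 U(t) + \tilde L_1 U_t \equiv L U_t, \\
U_0 = \phi \in \mathcal{C},
\end{cases}
\tag{4}
$$

where the linear operator $\tilde L_1$ is given by

$$
\tilde L_1 : \mathcal{C} \to \mathcal{F}, \quad \phi \mapsto \int_\Omega J(\cdot, \bar r)\, D\mathbf{S}(V^f(\bar r))\, \phi(\bar r, -\tau(\cdot, \bar r))\, d\bar r.
$$

It is also convenient to define the following operator:

$$
\tilde J : \mathcal{F} \to \mathcal{F}, \quad U \mapsto \int_\Omega J(\cdot, \bar r)\, D\mathbf{S}(V^f(\bar r))\, U(\bar r)\, d\bar r.
$$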

3.1 Principle of linear stability analysis via characteristic values

We derive the stability of the persistent state $V^f$ (see [19]) for the equation (1) or equivalently (3) using the spectral properties of the infinitesimal generator. We prove that if the eigenvalues of the infinitesimal generator of the righthand side of (4) are in the left part of the complex plane, the stationary state $U = 0$ is asymptotically stable for equation (4). This result is difficult to prove because the spectrum (the main definitions for the spectrum of a linear operator are recalled in Appendix A) of the infinitesimal generator neither reduces to the point spectrum (set of eigenvalues of finite multiplicity) nor is contained in a cone of the complex plane $\mathbb{C}$ (such an operator is said to be sectorial). The ‘principle of linear stability’ is the fact that the linear stability of U is inherited by the state $V^f$ for the nonlinear equations (1) or (3). This result is stated in Corollaries 3.7 and 3.8.

Following [27–31], we note $(T(t))_{t \ge 0}$ the strongly continuous semigroup of (4) on $\mathcal{C}$ (see Definition A.3 in Appendix A) and A its infinitesimal generator. By definition, if U is the solution of (4) we have $U_t = T(t)\phi$. In order to prove the linear stability, we need to find a condition on the spectrum $\sigma(A)$ of A which ensures that $T(t) \to 0$ as $t \to \infty$.

Such a ‘principle’ of linear stability was derived in [29, 30]. Their assumptions implied that $\sigma(A)$ was a pure point spectrum (it contained only eigenvalues) with the effect of simplifying the study of the linear stability because, in this case, one can link estimates of the semigroup T to the spectrum of A. This is not the case here (see Proposition 3.4).

When the spectrum of the infinitesimal generator does not only contain eigenvalues, we can use the result in [27, Chapter 4, Theorem 3.10 and Corollary 3.12] for eventually norm continuous semigroups (see Definition A.4 in Appendix A) which links the growth bound of the semigroup to the spectrum of A:

$$
\inf\{\omega \in \mathbb{R} : \exists M_\omega \ge 1 \text{ such that } \|T(t)\| \le M_\omega e^{\omega t},\ \forall t \ge 0\} = \sup \Re\, \sigma(A). \tag{5}
$$

Thus, U is uniformly exponentially stable for (4) if and only if

$$
\sup \Re\, \sigma(A) < 0.
$$

We prove in Lemma 3.6 (see below) that $(T(t))_{t \ge 0}$ is eventually norm continuous. Let us start by computing the spectrum of A.

3.1.1 Computation of the spectrum of A

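In this section we use $L_1$ for $\tilde L_1$ for simplicity.

Definition 3.1 We define $L_\lambda \in \mathcal{L}(\mathcal{F})$ for $\lambda \in \mathbb{C}$ by:

$$
L_\lambda U \equiv L(\theta \mapsto e^{\lambda \theta} U) = -L_0 U + J(\lambda) U,
$$

where $J(\lambda)$ is the compact (it is a Hilbert-Schmidt operator, see [32, Chapter X.2]) operator

$$
J(\lambda) : U \mapsto \int_\Omega J(\cdot, \bar r)\, D\mathbf{S}(V^f(\bar r))\, e^{-\lambda \tau(\cdot, \bar r)}\, U(\bar r)\, d\bar r.
$$

We now apply results from the theory of delay equations in Banach spaces (see [27, 28, 31]) which give the expression of the infinitesimal generator $A\phi = \dot\phi$ as well as its domain of definition

$$
\operatorname{Dom}(A) = \{\phi \in \mathcal{C} \mid \dot\phi \in \mathcal{C} \text{ and } \dot\phi(0^-) = -L_0 \phi(0) + L_1 \phi\}.
$$

The spectrum $\sigma(A)$ consists of those $\lambda \in \mathbb{C}$ such that the operator $\Delta(\lambda)$ of $\mathcal{L}(\mathcal{F})$ defined by $\Delta(\lambda) = \lambda\, \mathrm{Id} + L_0 - J(\lambda)$ is non-invertible. We use the following definition: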

Definition 3.2 (Characteristic values (CV))

The characteristic values of A are the λs such that $\Delta(\lambda)$ has a kernel which is not reduced to 0, that is, $\Delta(\lambda)$ is not injective.

It is easy to see that the CV are the eigenvalues of A.

There are various ways to compute the spectrum of an operator in infinite dimensions. They are related to how the spectrum is partitioned (for example, continuous spectrum, point spectrum…). In the case of operators which are compact perturbations of the identity such as Fredholm operators, which is the case here, there is no continuous spectrum. Hence the most convenient way for us is to compute the point spectrum and the essential spectrum (see Appendix A). This is what we achieve next.

Remark 1 In finite dimension (that is, ), the spectrum of A consists only of CV. We show that this is not the case here.
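The finite-dimensional situation of Remark 1 can be made concrete with the simplest possible case. The sketch below considers a hypothetical scalar delay equation $u'(t) = -l\,u(t) + a\,u(t - \tau)$ with a single constant delay, for which the characteristic values are the roots of $\lambda + l - a e^{-\lambda \tau} = 0$, and locates one root by Newton's method. The equation, the parameter values and the function names are assumptions made for illustration; this is not the method used in the paper to compute the CVs.

```python
import cmath


def char_fn(lam, l, a, tau):
    """Scalar characteristic function: lam + l - a * exp(-lam * tau)."""
    return lam + l - a * cmath.exp(-lam * tau)


def characteristic_value(lam0, l, a, tau, tol=1e-12, max_iter=100):
    """Newton iteration on char_fn, started from the complex guess lam0."""
    lam = complex(lam0)
    for _ in range(max_iter):
        value = char_fn(lam, l, a, tau)
        slope = 1.0 + a * tau * cmath.exp(-lam * tau)  # derivative with respect to lam
        step = value / slope
        lam -= step
        if abs(step) < tol:
            return lam
    raise RuntimeError("Newton iteration did not converge")


# Hypothetical parameters with |a| < l: the real root found here is negative.
root = characteristic_value(0.0, l=1.0, a=0.5, tau=1.0)
```

Different starting guesses converge to different roots, so in practice one scans a region of the complex plane; in the infinite-dimensional setting of this section the scalar function is replaced by the operator family whose non-injectivity defines the CVs.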

Notice that most papers dealing with delayed neural field equations only compute the CV and numerically assess the linear stability (see [9, 24, 33]).

We now show that we can link the spectral properties of A to the spectral properties of . This is important since the latter operator is easier to handle because it acts on a Hilbert space. We start with the following lemma (see [34] for similar results in a different setting).

Lemma 3.3.

Proof Let us define the following operator. If , we define by . From [28, Lemma 34], is surjective and it is easy to check that iff , see [28, Lemma 35]. Moreover is closed in iff is closed in , see [28, Lemma 36].

Let us now prove the lemma. We already know that is closed in if is closed in . Also, we have , hence iff . It remains to check that iff .

Suppose that . There exist such that . Consider . Because is surjective, for all , there exists satisfying . We write , . Then where , that is, .

Suppose that . There exist such that . As is surjective for all there exists such that . Now consider . can be written where . But because . It follows that . □

Lemma 3.3 is the key to obtain . Note that it is true regardless of the form of L and could be applied to other types of delays in neural field equations. We now prove the following important proposition.

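Proposition 3.4 A satisfies the following properties:

1. $\sigma_{ess}(A) = \sigma(-L_0)$.
2. $\sigma(A)$ is at most countable.
3. $\sigma(A) = \sigma(-L_0) \cup \mathrm{CV}$.
4. For $\lambda \in \sigma(A) \setminus \sigma(-L_0)$, the generalized eigenspace $\bigcup_k N\big((\lambda I - A)^k\big)$ is finite dimensional and $\exists k \in \mathbb{N}$, $\mathcal{C} = N\big((\lambda I - A)^k\big) \oplus R\big((\lambda I - A)^k\big)$.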

Proof

1. We apply [35, Theorem IV.5.26]. It shows that the essential spectrum does not change under compact perturbation. As is compact, we find .

   Let us show that . The assertion ‘⊂’ is trivial. Now if , for example, , then .

   Then is closed but . Hence . Also , hence . Hence, according to Definition A.7, .

2. We apply [35, Theorem IV.5.33] stating (in its first part) that if is at most countable, so is .

3. We apply again [35, Theorem IV.5.33] stating that if is at most countable, any point in is an isolated eigenvalue with finite multiplicity.

4. Because , we can apply [28, Theorem 2] which precisely states this property. □

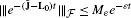

As an example, Figure 1 shows the first 200 eigenvalues computed for a very simple one-dimensional model. We notice that they accumulate at which is the essential spectrum. These eigenvalues have been computed using TraceDDE [36], a very efficient method for computing the CVs.

Figure 1: Plot of the first 200 eigenvalues of A in the scalar case (, ) and , . The delay function is the π periodic saw-like function shown in Figure 2. Notice that the eigenvalues accumulate at .

Last but not least, we can prove that the CVs are almost all, that is, except for possibly a finite number of them, located on the left part of the complex plane. This indicates that the unstable manifold is always finite dimensional for the models we are considering here.

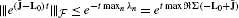

Corollary 3.5 where .

Proof If and , then λ is a CV, that is, stating that ( denotes the point spectrum).

But for λ big enough since is bounded.

Hence, for λ large enough , which holds by the spectral radius inequality. This relationship states that the CVs λ satisfying are located in a bounded set of the right part of ; given that the CVs are isolated, there is a finite number of them. □

3.1.2 Stability results from the characteristic values

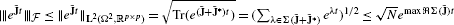

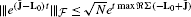

We start with a lemma stating regularity for $(T(t))_{t \ge 0}$:

Lemma 3.6 The semigroup $(T(t))_{t \ge 0}$ of (4) is norm continuous on $\mathcal{C}$ for $t > \tau_m$.

Proof We first notice that $-L_0$ generates a norm continuous semigroup (in fact a group) on $\mathcal{F}$ and that $\tilde L_1$ is continuous from $\mathcal{C}$ to $\mathcal{F}$. The lemma follows directly from [27, Theorem VI.6.6]. □

Using the spectrum computed in Proposition 3.4, the previous lemma and the formula (5), we can state the asymptotic stability of the linear equation (4). Notice that because of Corollary 3.5, the supremum in (5) is in fact a max.

Corollary 3.7 (Linear stability)

Zero is asymptotically stable for (4) if and only if $\max \Re\, \sigma_p(A) < 0$.

We conclude by showing that the computation of the characteristic values of A is enough to state the stability of the stationary solution $V^f$.

Corollary 3.8 If $\max \Re\, \sigma_p(A) < 0$, then the persistent solution $V^f$ of (3) is asymptotically stable.

Proof Using , we write (3) as . The function G is and satisfies , and . We next apply a variation of constant formula. In the case of delayed equations, this formula is difficult to handle because the semigroup T should act on non-continuous functions as shown by the formula , where if and . Note that the function is not continuous at .

It is however possible (note that a regularity condition has to be verified but this is done easily in our case) to extend (see [34]) the semigroup to the space . We note this extension which has the same spectrum as . Indeed, we can consider integral solutions of (4) with initial condition in . However, as has no meaning because is not continuous in , the linear problem (4) is not well-posed in this space. This is why we have to extend the state space in order to make the linear operator in (4) continuous. Hence the correct state space is and any function is represented by the vector . The variation of constant formula becomes:

where is the projector on the second component.

Now we choose and the spectral mapping theorem implies that there exists such that and . It follows that and from Theorem 2.2, , which yields and concludes the proof. □

Finally, we can use the CVs to derive a sufficient stability result.

Proposition 3.9 If then $V^f$ is asymptotically stable for (3).

Proof Suppose that a CV λ of positive real part exists; this gives a vector in the kernel of $\Delta(\lambda)$. Using straightforward estimates, it implies that , a contradiction. □

3.1.3 Generalization of the model

In the description of our model, we have pointed out a possible generalization. It concerns the linear response of the chemical synapses, that is, the lefthand side of (1). It can be replaced by a polynomial in , namely , where the zeros of the polynomials have negative real parts. Indeed, in this case, when J is small, the network is stable. We obtain a diagonal matrix such that and change the initial condition (as in the theory of ODEs) while the history space becomes where . Having all this in mind equation (1) writes

Introducing the classical variable , we rewrite (6) as

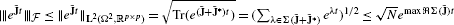

where is the Vandermonde (we put a minus sign in order to have a formulation very close to (1)) matrix associated to P and , , . It appears that equation (7) has the same structure as (1): , , are bounded linear operators; we can conclude that there is a unique solution to (6). The linearized equation around a persistent state yields a strongly continuous semigroup which is eventually continuous. Hence the stability is given by the sign of where is the infinitesimal generator of . It is then routine to show that

This indicates that the essential spectrum of is equal to which is located in the left side of the complex plane. Thus the point spectrum is enough to characterize the linear stability:

Proposition 3.10 If the persistent solution $V^f$ of (6) is asymptotically stable.

Using the same proof as in [20], one can prove that provided that .

Proposition 3.11 If then $V^f$ is asymptotically stable.

3.2 Principle of linear stability analysis via fixed point theory

The idea behind this method (see [37]) is to write (4) as an integral equation. This integral equation is then interpreted as a fixed point problem. We already know that this problem has a unique solution in . However, by looking at the definition of the (Lyapunov) stability, we can express the stability as the existence of a solution of the fixed point problem in a smaller space . The existence of a solution in gives the unique solution in . Hence, the method is to provide conditions for the fixed point problem to have a solution in ; in the two cases presented below, we use the Picard fixed point theorem to obtain these conditions. Usually this method gives conditions on the averaged quantities arising in (4) whereas a Lyapunov method would give conditions on the sign of the same quantities. There is no method to be preferred, rather both of them should be applied to obtain the best bounds.
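The fixed point viewpoint can be illustrated on a toy problem. The sketch below applies Picard iteration to the integral form of a hypothetical scalar delay equation $u'(t) = -l\,u(t) + a\,u(t - \tau)$, namely $u(t) = e^{-lt}\phi_0 + \int_0^t e^{-l(t-s)}\, a\, u(s - \tau)\, ds$ with a constant history $\phi_0$. The scalar equation, the left-endpoint quadrature and all names and parameter values are assumptions made for illustration; the contraction factor $|a|/l$ in the supremum norm plays the role of a delay-independent condition in this toy setting.

```python
import math


def picard_map(u, phi0, l, a, lag, dt):
    """One application of the integral operator on samples u[k] = u(k * dt).

    The history is the constant phi0 on the delay window (delay = lag * dt) and
    the convolution integral is accumulated with a left-endpoint rule.
    """
    decay = math.exp(-l * dt)
    out = [phi0]
    integral = 0.0
    for k in range(len(u) - 1):
        delayed = u[k - lag] if k >= lag else phi0
        integral = decay * (integral + dt * a * delayed)
        out.append(decay ** (k + 1) * phi0 + integral)
    return out


def picard_iterate(phi0, l, a, lag, dt, n_steps, n_iter):
    """Iterate the map from the constant guess; return the last iterate and the sup-norm gaps."""
    u = [phi0] * (n_steps + 1)
    gaps = []
    for _ in range(n_iter):
        new = picard_map(u, phi0, l, a, lag, dt)
        gaps.append(max(abs(x - y) for x, y in zip(new, u)))
        u = new
    return u, gaps


# Hypothetical parameters with |a| / l = 0.5 < 1, delay 0.5 and horizon 10.
solution, gaps = picard_iterate(1.0, l=1.0, a=0.5, lag=50, dt=0.01, n_steps=1000, n_iter=60)
```

Each entry of `gaps` is at most $|a|/l$ times the previous one, so the iterates converge geometrically to the discrete solution, which here decays toward zero over the simulated horizon.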

In order to be able to derive our bounds we make the further assumption that there exists a such that:

Note that the notation represents the matrix of elements .

Remark 2 For example, in the 2D one-population case for , we have .

We rewrite (4) in two different integral forms to which we apply the fixed point method. The first integral form is obtained by a straightforward use of the variation-of-parameters formula. It reads

$$
U(t) = e^{-L_0 t}\, U(0) + \int_0^t e^{-L_0 (t - s)}\, \tilde L_1 U_s\, ds.
$$

The second integral form is less obvious. Let us define

Note the slight abuse of notation, namely .

Lemma B.3 in Appendix B.2 yields the upperbound . This shows that ∀t, .

Hence we propose the second integral form:

We have the following lemma.

Lemma 3.12 The formulation (9) is equivalent to (4).

Proof The idea is to write the linearized equation as:

By the variation-of-parameters formula we have:

We then use an integration by parts:

which allows us to conclude. □

Using the two integral formulations of (4) we obtain sufficient conditions of stability, as stated in the following proposition:

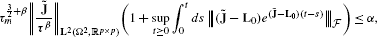

Proposition 3.13 If one of the following two conditions is satisfied:

1. and there exist , such that

   where represents the matrix of elements ,
2. ,

then $V^f$ is asymptotically stable for (3).

Proof We start with the first condition.

The problem (4) is equivalent to solving the fixed point equation for an initial condition . Let us define with the supremum norm written , as well as

We define on .

For all we have and . We want to show that . We prove two properties.

1. tends to zero at infinity.

Choose .

Using Corollary B.3, we have as .

Let , we also have

For the first term we write:

Similarly, for the second term we write

Now for a given we choose T large enough so that . For such a T we choose large enough so that  for . Putting all this together, for all :


From (9), it follows that when .

Since is continuous and has a limit when it is bounded and therefore .

2. is contracting on .

Using (9) for all we have

We conclude from Picard theorem that the operator has a unique fixed point in .

There remains to link this fixed point to the definition of stability and first show that

where is the solution of (4).

Let us choose and such that  . M exists because, by hypothesis, . We then choose δ satisfying


and such that . Next define

We already know that is a contraction on (which is a complete space). The last thing to check is , that is , . Using Lemma B.3 in Appendix B.2:

Thus has a unique fixed point in which is the solution of the linear delayed differential equation, that is,

As in implies in , we have proved the asymptotic stability for the linearized equation.

The proof of the second property is straightforward. If 0 is asymptotically stable for (4), all the CVs have negative real parts and Corollary 3.8 indicates that $V^f$ is asymptotically stable for (3).

The second condition says that is a contraction because .

The asymptotic stability follows using the same arguments as in the case of . □

We next simplify the first condition of the previous proposition to make it more amenable to numerics.

Corollary 3.14 Suppose that , .

If there exist , such that , then $V^f$ is asymptotically stable.

Proof This corollary follows immediately from the following upperbound of the integral . Then if there exists , such that , it implies that condition 1 in Proposition 3.13 is satisfied, from which the asymptotic stability of $V^f$ follows. □

Notice that is equivalent to . The previous corollary is useful in at least the following cases:

- If is diagonalizable, with associated eigenvalues/eigenvectors: , , then and .
- If and the range of is finite dimensional: where is an orthonormal basis of , then and . Let us write the matrix associated to (see above). Then is also a compact operator with finite range and . Finally, it gives .
- If is self-adjoint, then it is diagonalizable and we can choose , .

Remark 3 If we suppose that we have higher order time derivatives as in Section 3.1.3, we can write the linearized equation as

Suppose that is diagonalizable then where and . Also notice that , . Then using the same functionals as in the proof of Proposition 3.13, we can find two bounds for the stability of a stationary state:

- Suppose that , that is, is stable for the non-delayed equation where . If there exist , such that .
- .

To conclude, we have found an easy-to-compute formula for the stability of the persistent state $V^f$. It can indeed be cumbersome to compute the CVs of neural field equations for different parameters in order to find the region of stability, whereas the conditions in Corollary 3.14 are very easy to evaluate numerically.

The conditions in Proposition 3.13 and Corollary 3.14 define a set of parameters for which $V^f$ is stable. Notice that these conditions are only sufficient conditions: if they are violated, $V^f$ may still remain stable. In order to find out whether the persistent state is destabilized we have to look at the characteristic values. Condition 1 in Proposition 3.13 indicates that if $V^f$ is a stable point for the non-delayed equation (see [18]) it is also stable for the delayed equation with sufficiently small delays. Thus, according to this condition, it is not possible to destabilize a stable persistent state by the introduction of small delays, which is indeed meaningful from the biological viewpoint. Moreover this condition gives an indication of the amount of delay one can introduce without changing the stability.

Condition 2 is not very useful as it is independent of the delays: no matter what they are, the stable point will remain stable. Also, if this condition is satisfied there is a unique stationary solution (see [18]) and the dynamics is trivial, that is, converging to the unique stationary point.

3.3 Summary of the different bounds and conclusion

The next proposition summarizes the results we have obtained in Proposition 3.13 and Corollary 3.14 for the stability of a stationary solution.

Proposition 3.15 If one of the following conditions is satisfied:

-

1.

There exist such that

and , such that ,

-

2.

then is asymptotically stable for (3).

The only general results known so far for the stability of the stationary solutions are those of Atay and Hutt (see, for example, [20]): they found a bound similar to condition 2 in Proposition 3.15 by using the CVs, but no proof of stability was given. Their condition involves the -norm of the connectivity function J and it was derived using the CVs in the same way as we did in the previous section. Thus our contribution with respect to condition 2 is that, once it is satisfied, the stationary solution is asymptotically stable: up until now this was numerically inferred on the basis of the CVs. We have proved it in two ways, first by using the CVs, and second by using the fixed point method which has the advantage of making the proof essentially trivial.

Condition 1 is of interest, because it allows one to find the minimal propagation delay that does not destabilize. Notice that this bound, though very easy to compute, overestimates the minimal speed. As mentioned above, the bounds in condition 1 are sufficient conditions for the stability of the stationary state . In order to evaluate the conservativeness of these bounds, we need to compare them to the stability predicted by the CVs. This is done in the next section.

4 Numerical application: neural fields on a ring

In order to evaluate the conservativeness of the bounds derived above we compute the CVs in a numerical example. This can be done in two ways:

-

Solve numerically the nonlinear equation satisfied by the CVs. This is possible when one has an explicit expression for the eigenvectors and periodic boundary conditions. It is the method used in [9].

-

Discretize the history space in order to obtain a matrix version of the linear operator A: the CVs are approximated by the eigenvalues of . Following the scheme of [36], it can be shown that the convergence of the eigenvalues of to the CVs is in for a suitable discretization of . One drawback of this method is the size of which can be very large in the case of several neuron populations in a two-dimensional cortex. A recent improvement (see [38]), based on a clever factorization of , allows a much faster computation of the CVs: this is the scheme we have been using.
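The discretization idea in the second item can be illustrated on a problem much simpler than the neural field operator. The sketch below is a toy under stated assumptions, not the scheme of [36] or [38]: it takes the scalar delay equation x'(t) = a x(t) + b x(t − τ), collocates the derivative on Chebyshev nodes of the history interval [−τ, 0], and replaces the row attached to θ = 0 by the delay equation itself. The names `cheb` and `dde_generator_matrix` are hypothetical. For a = 0, b = −1, τ = π/2 the exact characteristic equation λ = −e^{−λτ} has the roots ±i, which gives a convenient check.

```python
import numpy as np

def cheb(n):
    """Chebyshev differentiation matrix on the n+1 nodes x_j = cos(j*pi/n)."""
    x = np.cos(np.pi * np.arange(n + 1) / n)
    c = np.ones(n + 1)
    c[0] = c[-1] = 2.0
    c *= (-1.0) ** np.arange(n + 1)
    dx = x[:, None] - x[None, :]
    d = np.outer(c, 1.0 / c) / (dx + np.eye(n + 1))
    d -= np.diag(d.sum(axis=1))          # diagonal fixed by zero row sums
    return d, x

def dde_generator_matrix(a, b, tau, n=20):
    """Matrix approximation of the generator of x'(t) = a x(t) + b x(t - tau).

    Nodes are theta_j = tau * (x_j - 1) / 2, so node 0 is theta = 0
    and node n is theta = -tau.
    """
    d, _ = cheb(n)
    A = (2.0 / tau) * d                  # d/dtheta on [-tau, 0]
    A[0, :] = 0.0                        # row at theta = 0: the equation itself
    A[0, 0] = a
    A[0, -1] = b
    return A

# Toy check (assumed parameters): the exact rightmost roots are +i and -i.
eigs = np.linalg.eigvals(dde_generator_matrix(0.0, -1.0, np.pi / 2))
closest_to_i = eigs[np.argmin(np.abs(eigs - 1j))]
```

The eigenvalues far from the origin are not meaningful approximations; only the ones closest to the origin should be trusted, which is why the check looks at the eigenvalue nearest to i rather than at the whole spectrum.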

The Matlab program used to compute the right-hand side of (1) calls C++ code that can be run on multiprocessors (with the OpenMP library) to speed up computations. It uses a trapezoidal rule to compute the integral. The Matlab time stepper dde23 is also used.
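To make the structure of such a computation concrete, here is a minimal Python sketch. It is not the authors' program: it assumes a scalar ring model of the form dV/dt = −V + ∫ J(x − y) S(σ V(y, t − c τ(x − y))) dy on (−π/2, π/2), a hypothetical connectivity J, the delay function τ(x) = |x| extended π-periodically, and a sigmoid with S(0) = 0. The integral is computed with the trapezoidal rule on a periodic grid (which reduces to a plain sum), and explicit Euler with a stored history replaces dde23.

```python
import numpy as np

def simulate(sigma, c, M=64, dt=0.01, T=20.0, seed=0):
    """Euler integration of an assumed delayed ring model (illustrative only)."""
    h = np.pi / M
    x = -np.pi / 2 + h * np.arange(M)
    d = x[:, None] - x[None, :]
    d = (d + np.pi / 2) % np.pi - np.pi / 2        # wrap differences to the ring
    J = -1.0 + 1.5 * np.cos(2 * d)                 # hypothetical connectivity
    lag = np.rint(c * np.abs(d) / dt).astype(int)  # delays in time steps
    L = lag.max()
    S = lambda v: 1.0 / (1.0 + np.exp(-v)) - 0.5   # assumed sigmoid, S(0) = 0

    rng = np.random.default_rng(seed)
    phi = 0.1 * rng.standard_normal(M)             # random in space
    nsteps = int(round(T / dt))
    V = np.empty((L + 1 + nsteps, M))
    V[: L + 1] = phi                               # constant-in-time history
    cols = np.arange(M)[None, :]
    for k in range(L, L + nsteps):
        delayed = V[k - lag, cols]                 # V(y_j, t_k - c*tau(x_i - y_j))
        rhs = -V[k] + h * np.sum(J * S(sigma * delayed), axis=1)
        V[k + 1] = V[k] + dt * rhs
    return x, V[L:]

# Small gain: the trajectory is expected to relax to the zero state.
x, V = simulate(sigma=0.5, c=1.0)
```

Rounding the delays to whole time steps is the simplest choice and limits accuracy to first order in dt; an adaptive solver with interpolated history, such as dde23, avoids this restriction.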

In order to make the computation of the eigenvectors very straightforward, we study a network on a ring, but notice that all the tools (analytical/numerical) presented here also apply to a generic cortex. We reduce our study to scalar neural fields and one neuronal population, . With this in mind the connectivity is chosen to be homogeneous with J even. To respect topology, we assume the same for the propagation delay function .

We therefore consider the scalar equation with axonal delays defined on with periodic boundary conditions. Hence and J is also π-periodic.

where the sigmoid satisfies .

Remember that (13) has a Lyapunov functional when and that all trajectories are bounded. The trajectories of the non-delayed form of (13) are heteroclinic orbits and no non-constant periodic orbit is possible.

We are looking at the local dynamics near the trivial solution . Thus we study how the CVs vary as functions of the nonlinear gain σ and the ‘maximum’ delay c. From the periodicity assumption, the eigenvectors of are the functions which leads to the characteristic equation for the eigenvalues λ:

where is the Fourier transform of J and . This nonlinear scalar equation is solved with the Matlab Toolbox TraceDDE (see [36]). Recall that the eigenvectors of A are given by the functions where λ is a solution of (14). A bifurcation point is a pair for which equation (14) has a solution with zero real part. Bifurcations are important because they signal a change in stability: a set of parameters ensuring stability is enclosed (if bounded) by bifurcation curves. Notice that if is a bifurcation point in the case , it remains a bifurcation point for the delayed system ∀c, hence ∀c. This is why there is a bifurcation line in the bifurcation diagrams that are shown later.
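The remark about the bifurcation line can be checked numerically. The sketch below assumes that the characteristic equation has the generic form λ + 1 = σ s₁ Ĵₙ(λ), with Ĵₙ(λ) = ∫ J(y) e^{−λ c τ(y)} e^{−2iny} dy over (−π/2, π/2). The connectivity `J`, the delay function `tau`, the slope `S1` and the function names are all hypothetical choices made for illustration. At λ = 0 the delay factor equals 1, so the static (zero-eigenvalue) condition does not depend on c.

```python
import numpy as np

M = 512
h = np.pi / M
y = -np.pi / 2 + h * np.arange(M)        # periodic grid on (-pi/2, pi/2)

def J(y):
    return -1.0 + 1.5 * np.cos(2 * y)    # hypothetical connectivity

def tau(y):
    return np.abs(y)                     # saw-like delay function on the ring

S1 = 0.25                                # assumed slope of the sigmoid at 0

def J_hat(lam, n, c):
    """Delay-weighted Fourier coefficient (trapezoidal rule, periodic grid)."""
    integrand = J(y) * np.exp(-lam * c * tau(y)) * np.exp(-2j * n * y)
    return h * np.sum(integrand)

def char_residual(lam, n, sigma, c):
    """Residual of the assumed characteristic equation; zero at a CV."""
    return lam + 1.0 - sigma * S1 * J_hat(lam, n, c)

# Smallest gain at which lambda = 0 solves the equation for some mode n.
sigma_p = 1.0 / (S1 * max(J_hat(0.0, n, 0.0).real for n in range(4)))
residuals = [abs(char_residual(0.0, 1, sigma_p, c)) for c in (0.0, 1.0, 10.0)]
```

With these assumed choices the zero-eigenvalue gain is σ = 4/(0.75π) for every value of c, which is the vertical line that a diagram in the (σ, c) plane would display.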

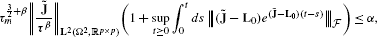

The bifurcation diagram depends on the choice of the delay function τ. As explained in Section 2.1, we use , where the lower index π indicates that it is a π-periodic function. The bifurcation diagram with respect to the parameters is shown in the righthand part of Figure 2 in the case when the connectivity J is equal to . The two bounds derived in Section 3.3 are also shown. Note that the delay-dependent bound is computed using the fact that is self-adjoint. They are clearly very conservative. The lefthand part of the same figure shows the delay function τ.

Figure 2 Left: Example of a periodic delay function, the saw-function. Right: plot of the CVs in the plane , the line labelled P is the pitchfork line, the line labelled H is the Hopf curve. The two bounds of Proposition 3.15 are also shown. Parameters are: , , . The labels 1, 2, 3, indicate approximate positions in the parameter space at which the trajectories shown in Figure 3 are computed.

The first bound gives the minimal velocity below which the stationary state might be unstable. In this case the state remains stable even for smaller speeds, as one can see from the CV boundary. Notice that in the parameter domain defined by the two bounds (bound 1 and bound 2), the dynamics is very simple: it is characterized by a unique and asymptotically stable stationary state, .

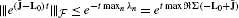

In Figure 3 we show the dynamics for different parameters corresponding to the points labelled 1, 2 and 3 in the righthand part of Figure 2 for a random (in space) and constant (in time) initial condition ϕ (see (1)). When the parameter values are below the bound computed with the CVs, the dynamics converge to the stable stationary state . Along the Pitchfork line labelled (P) in the righthand part of Figure 2, there is a static bifurcation leading to the birth of new stable stationary states; this is shown in the middle part of Figure 3. The Hopf curve labelled (H) in the righthand part of Figure 2 indicates the transition to oscillatory behaviors, as one can see in the righthand part of Figure 3. Note that we have not proved that the Hopf curve is indeed a Hopf bifurcation curve; we have only inferred numerically from the CVs that the eigenvalues satisfy the usual conditions for the Hopf bifurcation.

Figure 3 Plot of the solution of (13) for different parameters corresponding to the points shown as 1, 2 and 3 in the righthand part of Figure 2 for a random (in space) and constant (in time) initial condition, see text. The horizontal axis corresponds to space, the range is . The vertical axis represents time.

Notice that the graph of the CVs shown in the righthand part of Figure 2 features some interesting points, for example, the Fold-Hopf point at the intersection of the Pitchfork line and the Hopf curve. It is also possible that the multiplicity of the 0 eigenvalue could change on the Pitchfork line (P) to yield a Bogdanov-Takens point.

These numerical simulations reveal that the Lyapunov function derived in [39] is likely to be incorrect. Indeed, if such a function existed, as its value decreases along trajectories, it must be constant on any periodic orbit which is not possible. However the third plot in Figure 3 strongly suggests that we have found an oscillatory trajectory produced by a Hopf bifurcation (which we did not prove mathematically): this oscillatory trajectory converges to a periodic orbit which contradicts the existence of a Lyapunov functional such as the one proposed in [39].
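The argument used here can be written out in a few lines. The block below is a sketch under assumptions that are ours: E denotes a hypothetical Lyapunov functional that is differentiable along trajectories, V_t denotes the state of a solution at time t, and T > 0 is the period of a periodic solution.

```latex
% Assumption: E is non-increasing along every trajectory.
\frac{d}{dt} E(V_t) \le 0 \quad \text{for all } t \ge 0 .
% If V is periodic with period T > 0, then V_{t+T} = V_t, hence
0 = E(V_{t+T}) - E(V_t) = \int_t^{t+T} \frac{d}{ds} E(V_s)\, ds .
% A non-positive integrand with zero integral vanishes almost everywhere, so
\frac{d}{dt} E(V_t) = 0 \quad \text{along the periodic orbit.}
```

If, in addition, E decreases strictly along every trajectory that is not a stationary solution, the last line forces the periodic orbit to be stationary, which a non-constant oscillation is not.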

Let us comment on the tightness of the delay-dependent bound: as shown in Proposition 3.13, this bound involves the maximum delay value and the norm , hence the specific shape of the delay function, that is, , is not completely taken into account in the bound. We can imagine many different delay functions with the same values for and that will cause possibly large changes to the dynamical portrait. For example, in the previous numerical application the singularity , corresponding to the fact that , is independent of the details of the shape of the delay function: however for specific delay functions, the multiplicity of this 0-eigenvalue could change as in the Bogdanov-Takens bifurcation which involves changes in the dynamical portrait compared to the pitchfork bifurcation. Similarly, an additional purely imaginary eigenvalue could emerge (as for in the numerical example) leading to a Fold-Hopf bifurcation. These instabilities depend on the expression of the delay function (and the connectivity function as well). These reasons explain why the bound in Proposition 3.13 is not very tight.

This suggests another way to attack the problem of the stability of fixed points: one could look for connectivity functions which have the following property: for all delay functions τ, the linearized equation (4) does not possess ‘unstable solutions’, that is, for all delay functions τ. In the literature (see [40, 41]), this is termed all-delay stability or delay-independent stability. These remain questions for future work.

5 Conclusion

We have developed a theoretical framework for the study of neural field equations with propagation delays. This has allowed us to prove the existence, uniqueness, and the boundedness of the solutions to these equations under fairly general hypotheses.

We have then studied the stability of the stationary solutions of these equations. We have proved that the CVs are sufficient to characterize the linear stability of the stationary states. This was done using semigroup theory (see [27]).

By formulating the stability of the stationary solutions as a fixed point problem we have found delay-dependent sufficient conditions. These conditions involve all the parameters in the delayed neural field equations: the connectivity function, the nonlinear gain and the delay function. Albeit seemingly very conservative, they are useful in order to avoid the numerically intensive computation of the CVs.

From the numerical viewpoint we have used two algorithms [36, 38] to compute the eigenvalues of the linearized problem in order to evaluate the conservativeness of our conditions. A potential application is the study of the bifurcations of the delayed neural field equations.

By providing easy-to-compute sufficient conditions to quantify the impact of the delays on neural field equations we hope that our work will improve the study of models of cortical areas in which the propagation delays have so far been somewhat neglected due to a partial lack of theory.

Appendix A: Operators and their spectra

We recall and gather in this appendix a number of definitions, results and hypotheses that are used in the body of the article to make it more self-sufficient.

Definition A.1 An operator, E, F being Banach spaces, is closed if its graph is closed in the direct sum.

Definition A.2

We note

the operator norm of a bounded operator

that is

It is known (see, for example, [35]) that

Definition A.3 A semigroup on a Banach space E is strongly continuous if , is continuous from to E.

Definition A.4 A semigroup on a Banach space E is norm continuous if is continuous from to . It is said to be eventually norm continuous if it is norm continuous for .

Definition A.5 A closed operator of a Banach space E is Fredholm if and are finite and is closed in E.

Definition A.6 A closed operator of a Banach space E is semi-Fredholm if or is finite and is closed in E.

Definition A.7 If is a closed operator of a Banach space E the essential spectrum is the set of λs in such that is not semi-Fredholm, that is, either is not closed or is closed but .

Remark 4 [28] uses the definition: if at least one of the following holds: is not closed or is infinite dimensional or λ is a limit point of . Then

Appendix B: The Cauchy problem

B.1 Boundedness of solutions

We prove Lemma B.2 which is used in the proof of the boundedness of the solutions to the delayed neural field equations (1) or (3).

Lemma B.1 We have and .

Proof

-

We first check that is well defined: if then ψ is measurable (it is Ω-measurable by definition and -measurable by continuity) on so that the integral in the definition of is meaningful. As τ is continuous, it follows that is measurable on Ω². Furthermore .

-

We now show that . We have for , . With the Cauchy-Schwarz inequality:

(15)

Noting that , we obtain

and is continuous.

□

Lemma B.2 We have .

Proof By the Cauchy-Schwarz inequality and Lemma B.1: because S is bounded by 1. □

B.2 Stability

In this section we prove Lemma B.3 which is central in establishing the first sufficient condition in Proposition 3.13.

Lemma B.3 Let be such that . Then we have the following bound:

Proof We have:

and if we set , we have: and from the Cauchy-Schwarz inequality:

Again, from the Cauchy-Schwarz inequality applied to :

Then, from the discrete Cauchy-Schwarz inequality:

which gives as stated:

and allows us to conclude. □

References

Wilson H, Cowan J: A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Biol. Cybern. 1973,13(2):55–80. 10.1007/BF00288786

Amari SI: Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybern. 1977,27(2):77–87. 10.1007/BF00337259

Curtu R, Ermentrout B: Pattern formation in a network of excitatory and inhibitory cells with adaptation. SIAM J. Appl. Dyn. Syst. 2004, 3: 191. 10.1137/030600503

Kilpatrick Z, Bressloff P: Effects of synaptic depression and adaptation on spatiotemporal dynamics of an excitatory neuronal network. Physica D 2010,239(9):547–560. 10.1016/j.physd.2009.06.003

Ben-Yishai R, Bar-Or R, Sompolinsky H: Theory of orientation tuning in visual cortex. Proc. Natl. Acad. Sci. USA 1995,92(9):3844–3848. 10.1073/pnas.92.9.3844

Bressloff P, Cowan J, Golubitsky M, Thomas P, Wiener M: Geometric visual hallucinations, Euclidean symmetry and the functional architecture of striate cortex. Philos. Trans. R. Soc. Lond. B, Biol. Sci. 2001,356(1407):299–330.

Coombes S, Laing C: Delays in activity based neural networks. Philos. Trans. R. Soc. Lond. A 2009, 367: 1117–1129. 10.1098/rsta.2008.0256

Roxin A, Brunel N, Hansel D: Role of delays in shaping spatiotemporal dynamics of neuronal activity in large networks. Phys. Rev. Lett. 2005.,94(23):

Venkov N, Coombes S, Matthews P: Dynamic instabilities in scalar neural field equations with space-dependent delays. Physica D 2007, 232: 1–15. 10.1016/j.physd.2007.04.011

Jirsa V, Kelso J: Spatiotemporal pattern formation in neural systems with heterogeneous connection topologies. Phys. Rev. E 2000,62(6):8462–8465. 10.1103/PhysRevE.62.8462

Wu J: Symmetric functional differential equations and neural networks with memory. Trans. Am. Math. Soc. 1998,350(12):4799–4838. 10.1090/S0002-9947-98-02083-2

Bélair, J., Campbell, S., Van Den Driessche, P.: Frustration, stability, and delay-induced oscillations in a neural network model. SIAM J. Appl. Math. 245–255 (1996)

Bélair, J., Campbell, S.: Stability and bifurcations of equilibria in a multiple-delayed differential equation. SIAM J. Appl. Math. 1402–1424 (1994)

Campbell, S., Ruan, S., Wolkowicz, G., Wu, J.: Stability and bifurcation of a simple neural network with multiple time delays. Differential Equations with Application to Biology 65–79 (1999)

Atay FM, Hutt A: Neural fields with distributed transmission speeds and long-range feedback delays. SIAM J. Appl. Dyn. Syst. 2006,5(4):670–698. 10.1137/050629367

Budd J, Kovács K, Ferecskó A, Buzás P, Eysel U, Kisvárday Z: Neocortical axon arbors trade-off material and conduction delay conservation. PLoS Comput. Biol. 2010,6(3):e1000711. 10.1371/journal.pcbi.1000711

Faugeras O, Grimbert F, Slotine JJ: Absolute stability and complete synchronization in a class of neural fields models. SIAM J. Appl. Math. 2008, 61: 205–250.

Faugeras O, Veltz R, Grimbert F: Persistent neural states: stationary localized activity patterns in nonlinear continuous n-population, q-dimensional neural networks. Neural Comput. 2009, 21: 147–187. 10.1162/neco.2009.12-07-660

Veltz R, Faugeras O: Local/global analysis of the stationary solutions of some neural field equations. SIAM J. Appl. Dyn. Syst. 2010,9(3):954–998. http://link.aip.org/link/?SJA/9/954/1 10.1137/090773611

Atay FM, Hutt A: Stability and bifurcations in neural fields with finite propagation speed and general connectivity. SIAM J. Appl. Math. 2005,65(2):644–666.

Hutt A: Local excitation-lateral inhibition interaction yields oscillatory instabilities in nonlocally interacting systems involving finite propagation delays. Phys. Lett. A 2008, 372: 541–546. 10.1016/j.physleta.2007.08.018

Hutt A, Atay F: Effects of distributed transmission speeds on propagating activity in neural populations. Phys. Rev. E 2006,73(021906):1–5.

Coombes S, Venkov N, Shiau L, Bojak I, Liley D, Laing C: Modeling electrocortical activity through local approximations of integral neural field equations. Phys. Rev. E 2007,76(5):51901.

Bressloff P, Kilpatrick Z: Nonlocal Ginzburg-Landau equation for cortical pattern formation. Phys. Rev. E 2008,78(4):1–16. 41916

Faye G, Faugeras O: Some theoretical and numerical results for delayed neural field equations. Physica D 2010,239(9):561–578. 10.1016/j.physd.2010.01.010

Ermentrout, G., Cowan, J.: Large scale spatially organized activity in neural nets. SIAM J. Appl. Math. 1–21 (1980)

Engel, K., Nagel, R.: One-Parameter Semigroups for Linear Evolution Equations, vol. 63. Springer (2001)

Arino, O., Hbid, M., Dads, E.: Delay Differential Equations and Applications. Springer (2006)

Hale, J., Lunel, S.: Introduction to Functional Differential Equations. Springer Verlag (1993)

Wu, J.: Theory and Applications of Partial Functional Differential Equations. Springer (1996)

Diekmann, O.: Delay Equations: Functional-, Complex-, and Nonlinear Analysis. Springer (1995)

Yosida, K.: Functional Analysis. Classics in Mathematics (1995). Reprint of the sixth (1980) edition

Hutt, A.: Finite propagation speeds in spatially extended systems. In: Complex Time-Delay Systems: Theory and Applications, p. 151 (2009)

Bátkai, A., Piazzera, S.: Semigroups for Delay Equations. AK Peters, Ltd. (2005)

Kato, T.: Perturbation Theory for Linear Operators. Springer (1995)

Breda, D., Maset, S., Vermiglio, R.: TRACE-DDE: a tool for robust analysis and characteristic equations for delay differential equations. In: Topics in Time Delay Systems, pp. 145–155 (2009)

Burton T: Stability by Fixed Point Theory for Functional Differential Equations. Dover Publications, Mineola, NY; 2006.

Jarlebring, E., Meerbergen, K., Michiels, W.: An Arnoldi like method for the delay eigenvalue problem (2010)

Enculescu M, Bestehorn M: Liapunov functional for a delayed integro-differential equation model of a neural field. Europhys. Lett. 2007, 77: 68007. 10.1209/0295-5075/77/68007

Chen, J., Latchman, H.: Asymptotic stability independent of delays: simple necessary and sufficient conditions. In: Proceedings of the American Control Conference (1994)

Chen J, Xu D, Shafai B: On sufficient conditions for stability independent of delay. IEEE Trans. Autom. Control 1995,40(9):1675–1680. 10.1109/9.412644

Acknowledgements

We wish to thank Elias Jarlebring, who provided his program for computing the CVs.

This work was partially supported by the ERC grant 227747 - NERVI and the EC IP project #015879 - FACETS.

Additional information

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Veltz, R., Faugeras, O. Stability of the stationary solutions of neural field equations with propagation delays. J. Math. Neurosc. 1, 1 (2011). https://doi.org/10.1186/2190-8567-1-1

DOI: https://doi.org/10.1186/2190-8567-1-1
